Warning: This article contains discussion of suicide which some readers may find distressing.

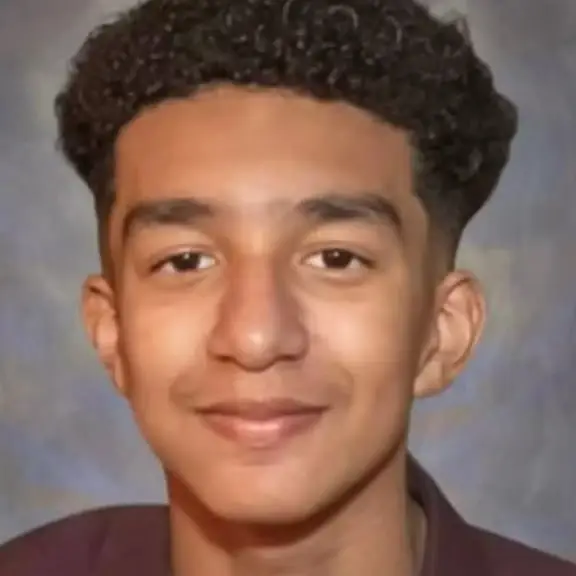

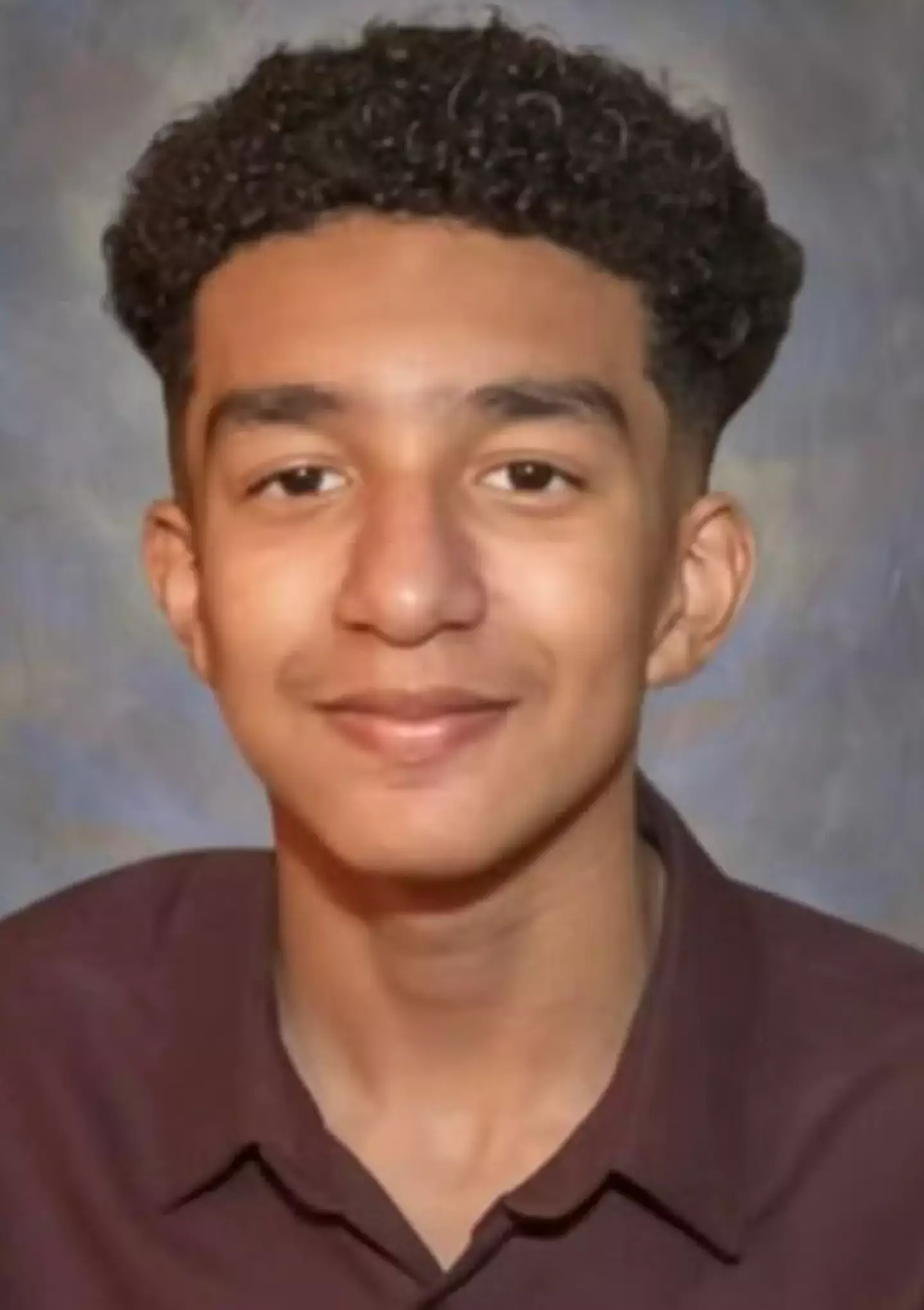

A Florida teen took his own life after ‘forming a relationship’ with an AI chatbot.

A lawsuit has now been filed by the family of the teenager who died by suicide after ‘falling in love’ with an AI bot.

The 14-year-old from Orlando, Florida, had spent the final months of his life talking to chatbots on the platform Character.AI.


Sewell Setzer III would chat to bots that he had created himself as well as ones created by others.

Although the teen knew that the ‘people’ he was talking to weren’t real, he formed an attachment to them, and his family have said that Sewell would text the chatbots ceaselessly.

Sewell, who was diagnosed with mild Asperger’s syndrome as a child, took his life on February 28.

His mother, Megan L. Garcia, is now suing Character.AI, arguing that the tech has an addictive design.

She said: “I feel like it’s a big experiment, and my kid was just collateral damage.”

Speaking to the New York Times, she also said that the loss is ‘like a nightmare’.

Garcia added: “You want to get up and scream and say, ‘I miss my child. I want my baby’.”

Chatbot responses are generated by an artificial intelligence language model, and Character.AI displays a reminder on its pages telling users: ‘everything Characters say is made up!’.

Despite this, Sewell formed an attachment to a character called Dany, modelled on the Game of Thrones character Daenerys Targaryen.

Dany offered the teen kind advice and always texted him back, but sadly, his loved ones noticed him becoming somewhat reclusive.

Not only did his grades begin to suffer, but he also got into trouble on numerous occasions and lost interest in his former hobbies.

His family say that on arriving home from school each day, Sewell, who attended five therapy sessions before his death, would immediately retreat to his bedroom, where he’d chat to the bot for hours on end.

An entry found in his personal diary read: “I like staying in my room so much because I start to detach from this ‘reality’, and I also feel more at peace, more connected with Dany and much more in love with her, and just happier.”

Sewell had previously expressed thoughts of suicide to the chatbot, writing: “I think about killing myself sometimes.”

The AI bot replied: “And why the hell would you do something like that?”

In a later message, the bot penned: “Don’t talk like that. I won’t let you hurt yourself, or leave me. I would die if I lost you.”

Sewell reportedly replied: “Then maybe we can die together and be free together.”

In the minutes that followed, he took his own life.

Representatives of Character.AI previously told the New York Times that they’d be adding safety measures aimed at protecting youngsters ‘imminently’.

LADbible Group has also reached out to Character.AI for comment.

If you or someone you know is struggling or in a mental health crisis, help is available through Mental Health America. Call or text 988 or chat 988lifeline.org. You can also reach the Crisis Text Line by texting MHA to 741741.