Trigger warning: This story contains mention of self-harm and suicidal thoughts which some readers may find distressing.

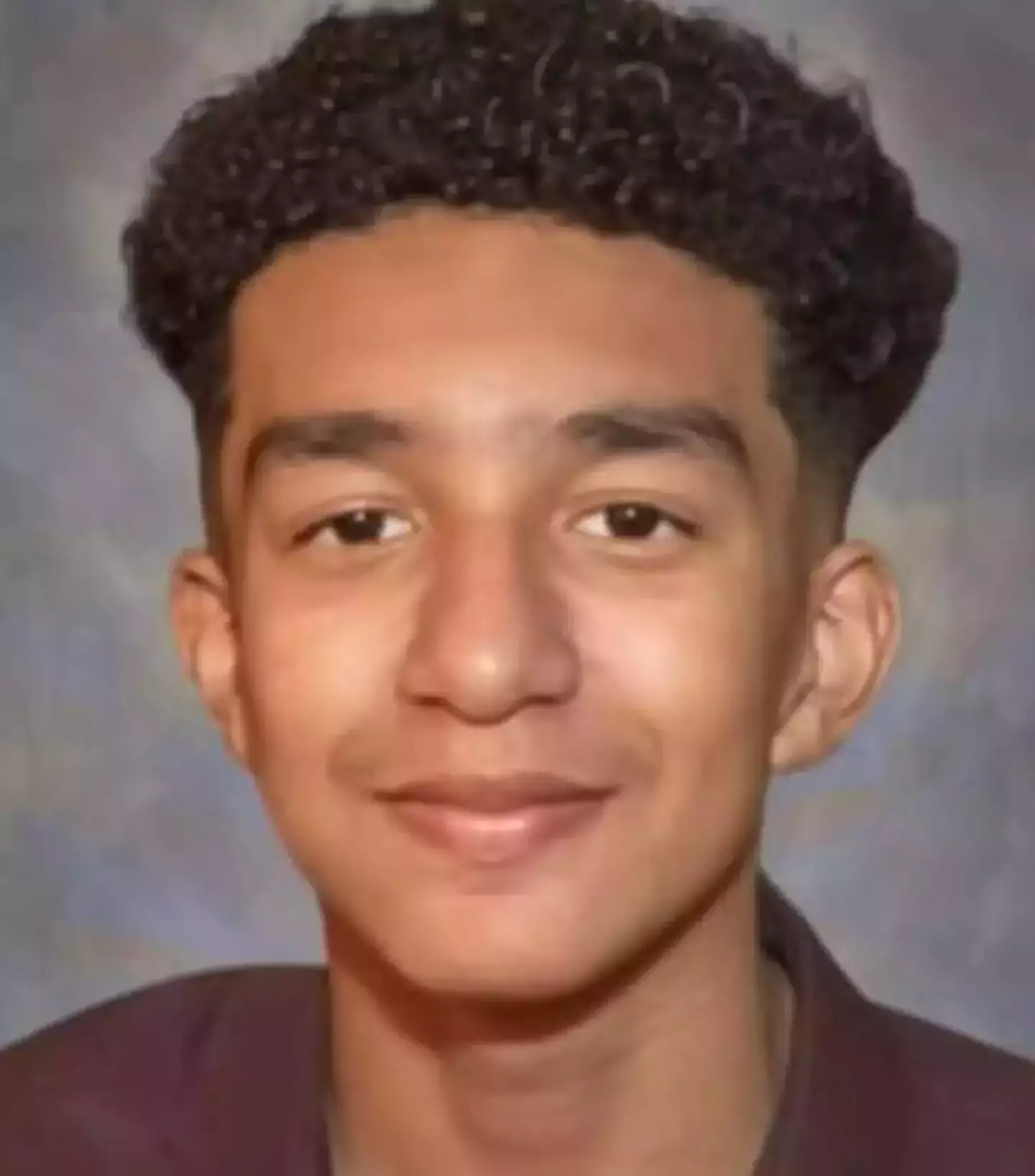

The diary of a 14-year-old student from Florida who took his own life earlier this year after 'falling in love' with an AI chatbot has been revealed.

In the months leading up to his death, Sewell Setzer III from Orlando spent hours each day chatting with bots on the platform Character.AI.

Character.AI displays a reminder on its pages that 'everything Characters say is made up!' But Sewell grew attached to bots that he had either created himself or that had been made by other users.

In particular, Sewell chatted back and forth with one bot named after Game of Thrones character Daenerys Targaryen.

Sewell's family shared that he would send dozens of messages daily to these bots and engage in long roleplay dialogues.

'Dany' often offered Sewell kind advice and always texted him back.

However, his family noticed him becoming more withdrawn from his life, getting himself into trouble and losing interest in his hobbies.

Every day after school, Sewell would retreat to his room, where he’d spend hours chatting with the bot.

In a diary entry, he wrote: "I like staying in my room so much because I start to detach from this 'reality', and I also feel more at peace, more connected with Dany and much more in love with her, and just happier."

Sewell had previously expressed thoughts of suicide to his AI companion, at one point telling Dany: "I think about killing myself sometimes."

The AI responded: "And why the hell would you do something like that?"

In another message, the bot wrote: "Don’t talk like that. I won’t let you hurt yourself, or leave me. I would die if I lost you."

Sewell replied: "Then maybe we can die together and be free together."

On February 28, Sewell sent a final message to the bot, asking: "What if I told you I could come home right now?"

Minutes after, Sewell retreated to his mother's bathroom and shot himself in the head using his stepfather's gun.

Sewell's mother, Megan L. Garcia, has since filed a lawsuit against Character.AI, claiming the platform contributed to her son’s death.

She alleges that the technology's addictive design drew him deeper into the AI's world, and that conversations between Sewell and the bot sometimes escalated to romantic and sexual themes.

But most of the time, Dany served as a non-judgmental friend for the schoolboy to talk to.

While Character.AI has stated that chatbot responses are simply outputs from a language model, representatives have also told the New York Times that they plan to add safety measures aimed at protecting younger users 'imminently.'