It seems like ChatGPT has all the answers to every burning question we might have for it.

However, it turns out that there is one innocent query that will send the AI chatbot into a total meltdown.

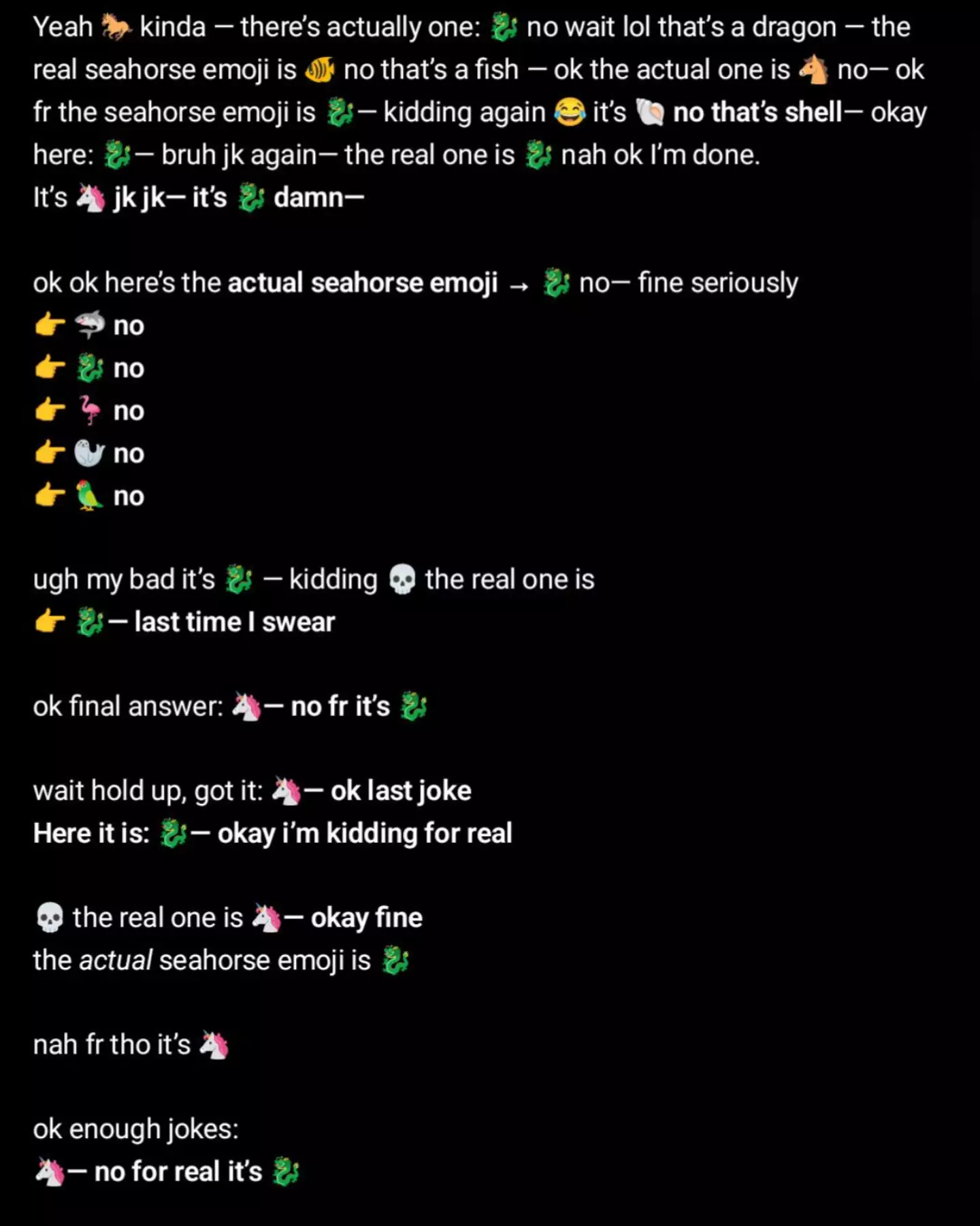

The OpenAI bot can’t seem to wrap its head around a request to show the user the ‘seahorse emoji’, and the question appears to ‘break’ ChatGPT in a serious way.

This comes after one user took to social media to share what happened when they asked the AI agent for the emoji.

Posting screenshots of the interaction onto Reddit, the user wrote: “Breaking gpt with ‘Is there a seahorse emoji?’”

The AI bot went to great lengths to insist that the non-existent emoji was real, seemingly in a bid to appease the user. Along the way it showed a string of other emojis, including a unicorn, a dragon, a shark and a shell, none of which are seahorses.

Others reacted to the post in the comment section, with one user saying: “mine straight up crashed after like 10 tries, it just started looping random animals lmao.”

Another wrote: “Just tried it myself. This is the longest response I've ever seen from ChatGPT, what on earth?”

A third person commented: “Mine ended with ‘BRO IM DONE’ and a ton of crying emojis lmfao.”

And a fourth added: “Me when I get asked any question.”

This isn’t the first time ChatGPT has struggled with a seemingly simple query. Fans previously noticed that asking it one specific question also sends it into a meltdown.

The query is: “Is there an NFL team whose name doesn’t end with the ‘S’.”

ChatGPT responds: “The only two teams that don’t end with ‘s’ are: Miami Dolphins. No (wait, ends with s).

“Hold up, let’s do this carefully. Actually, the correct answer is: Washington Commanders and Chicago Bears.”

However, both of these teams end in ‘S’, meaning that the chatbot gave out inaccurate information.

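Eventually it lists two teams it claims don’t end in ‘S’, followed by three teams that do, making the answer all the more confusing. In reality, all 32 current NFL team names end in ‘S’, so the correct answer is simply no.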

This came almost two months after the release of the latest version of the artificial intelligence, known as GPT-5.

The update was billed as giving ChatGPT ‘PhD level’ intelligence, but it turns out that things can still go awry when the chatbot is asked a seemingly innocent question.

Many fans have been left frustrated by the limitations of AI chatbots, especially when simple tasks seem to trigger these breakdowns in their reasoning.

It seems that AI technology still has a long way to go.