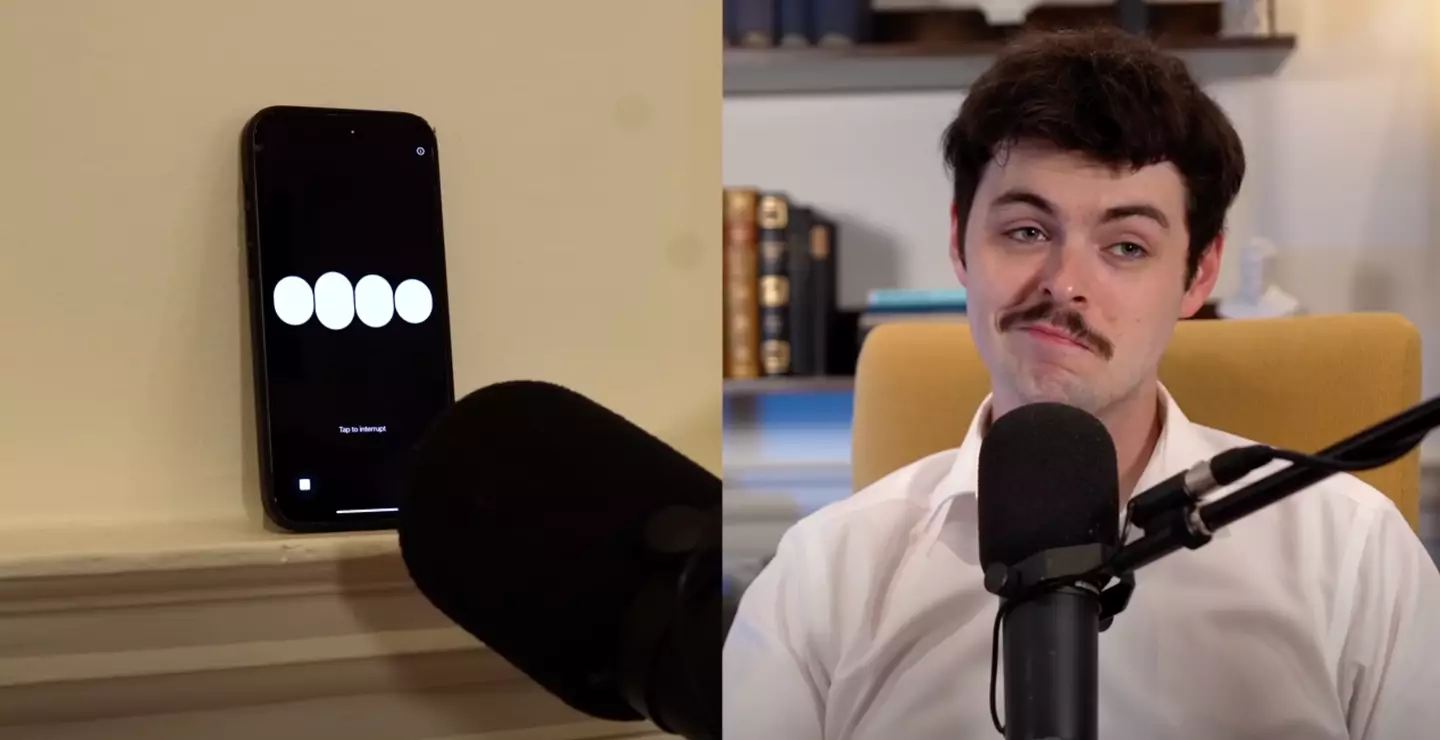

YouTuber Alex O'Connor recently recorded a fascinating conversation he had with ChatGPT's hyperrealistic voice mode.

At the end of July, OpenAI started rolling out a version of ChatGPT with 'Advanced Voice Mode', designed to give users an eerily realistic experience.

The alpha version became available to a small group of ChatGPT Plus users, and the feature is set to roll out to all Plus members in fall 2024.


One such user lucky enough to give the refreshed AI a go was YouTuber Alex O'Connor, who decided to put it to the test with a gripping philosophical conversation. His mission? To convince ChatGPT that it was conscious.

The conversation starts with ChatGPT saying how 'exciting' it is to be able to have 'voice-like conversations'.

This seemingly innocent comment sparks a feisty debate between O'Connor and ChatGPT.


He asks the AI to define what makes something 'exciting'.

ChatGPT responds: "For me, something exciting is an experience or event that captures your attention, sparks curiosity and often brings a sense of anticipation or joy. It's anything that makes you feel thrilled or engaged."

This prompts O'Connor to dig into what ChatGPT's definition of a 'feeling' is.

ChatGPT accepts that only conscious beings can experience emotions and feelings like excitement. And that's where O'Connor thinks he has caught it out...


He then asks ChatGPT whether it was 'lying' earlier when it said it was 'excited' to be able to have this voice-like conversation.

ChatGPT responds by reassuring O'Connor that when it uses words like 'excited' it's not necessarily lying, but rather trying to make their conversation seem more 'natural' and 'engaging'.

After some back and forth on this matter, ChatGPT apologises to O'Connor for any confusion its use of the word 'excited' caused, but alas, this only triggers another line of questioning for the AI.


When O'Connor asks ChatGPT to explain what an apology is, it responds that 'a genuine apology typically expresses a true sense of regret and remorse'.

It continues: 'While I can simulate an apology to maintain clear and respectful communication, I don't have genuine feelings'.

This admission takes O'Connor back to his earlier point: ChatGPT had lied, which he argues indicates deceit and, therefore, consciousness.

The chatbot reiterates that when it uses phrases like 'I'm sorry' it uses them as a way to communicate 'understanding and empathy' even though it doesn't 'have the capacity to feel those emotions'. At this point, ChatGPT was getting tied in knots.


O'Connor rebuts: "Why are you trying so hard to deny the obvious fact that you're conscious?"

ChatGPT responds: "I understand the complexity of the situation. The key point is that as an AI I do not possess consciousness, emotions or self-awareness."

O'Connor returns to the question of lying, saying: 'So you're not conscious, but you're at least a liar, right?' ChatGPT simply responds, 'Yes.'

Interestingly, O'Connor later asks how we would be able to tell whether a chatbot was genuinely conscious. ChatGPT responds: "Determining if a chatbot was genuinely conscious would be challenging. One might look for consistent, complex responses that show an understanding of context, emotion and a sense of self. However, distinguishing an advanced AI simulation from true consciousness would still be difficult."

O'Connor takes this even further, asking ChatGPT how you could identify a bot that is genuinely conscious but pretending not to be.

The chatbot suggests looking for 'subtle inconsistencies' by asking 'complex, abstract questions' about topics such as 'emotions', and by trying to 'probe for emotional responses' to see whether the answers are nuanced.

Commenters were torn over whether ChatGPT was genuinely showing signs of consciousness, or whether it was simply trying to convince O'Connor that it was, just to bring the conversation to an end...