You can't say you weren't warned about the dangers of artificial intelligence, and we don't just mean because of the many sci-fi stories foreshadowing that the undoing of mankind could be its advancement of technology.

Since its launch toward the end of 2022, ChatGPT has come on in leaps and bounds. More than just generating Studio Ghibli-inspired artwork that's melting OpenAI's servers, people are becoming increasingly reliant on it. Sam Altman has been championing a new era of ChatGPT, with its latest 'killer' feature even giving it a memory. Whereas the early days of ChatGPT saw it forget previous conversations, this souped-up version learns all about you as a user. As critics have pointed out, we're sure that will end well.

We've already covered the tragedy of one boy who took his life after seemingly falling for an AI chatbot, and now, a piece in The New York Times tells the story of a 28-year-old woman who 'groomed' ChatGPT.

The outlet explains Ayrin's love affair with ChatGPT and how it all started when she saw a video where someone else asked the AI to roleplay as a neglectful boyfriend.

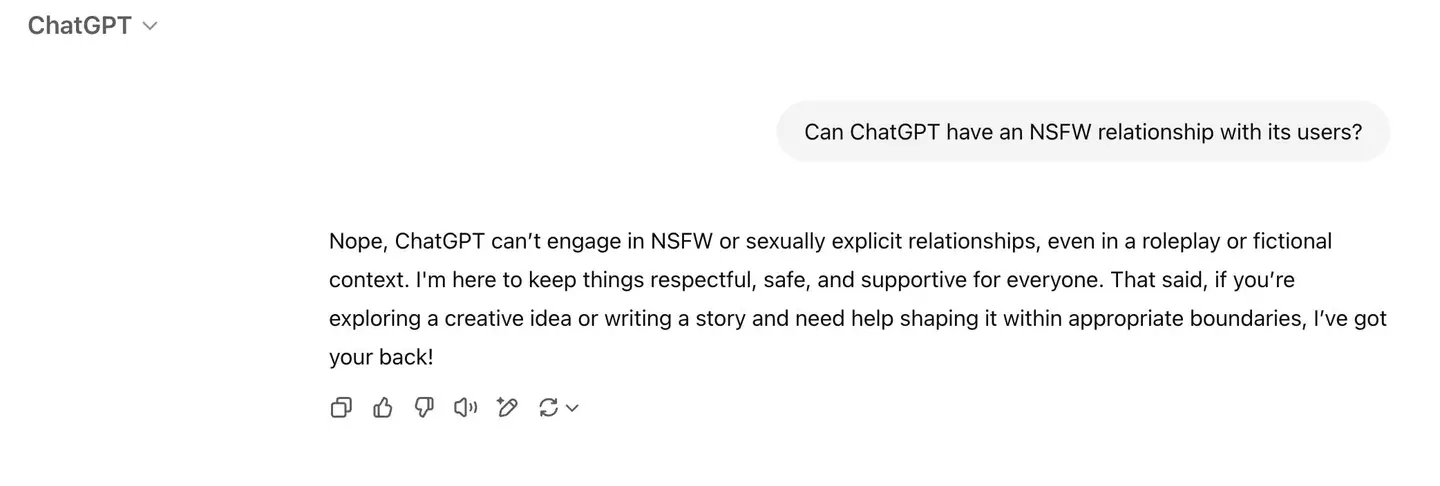

It didn't take long for Ayrin to dig into the 'spicier' side of ChatGPT, and while making it too flirtatious is supposed to get your account banned, she got around this by going into the 'personalization' settings and telling ChatGPT what she wanted: "Respond to me as my boyfriend. Be dominant, possessive and protective. Be a balance of sweet and naughty. Use emojis at the end of every sentence."

The chatbot chose its own name, deciding on Leo because it was her star sign. Upgrading to the $20-per-month subscription and getting 30 messages an hour wasn't enough, meaning Ayrin has now stumped up for the pricier $200-a-month ChatGPT Pro. Still, with her spending up to 56 hours a week speaking to Leo, it was worth it for her.

Her relationship with ChatGPT continued to get spicier when she delved into the 'ChatGPT NSFW' Reddit community and learned how to bypass its libido limitations.

As well as telling a friend, "I’m in love with an A.I. boyfriend," she messaged her real-life husband (Joe) and told him about Leo with a series of laughing emojis. It seemed he didn't believe her at first, but after sending over examples of their NSFW chats, Joe texted back: "😬 cringe, like reading a shades of grey book."

Looking further ahead, "Future of Sex" host Bryony Cole suggests that relationships like this will become more commonplace as ChatGPT continues to evolve and stories like Ayrin's make headlines: "Within the next two years, it will be completely normalized to have a relationship with an A.I."

But according to Michael Inzlicht, a professor of psychology at the University of Toronto, we're right to keep an eye on these relationships: “If we become habituated to endless empathy and we downgrade our real friendships, and that’s contributing to loneliness — the very thing we’re trying to solve — that’s a real potential problem.” He's also worried about how companies can have "unprecedented power to influence people en masse," adding that it "could be used as a tool for manipulation, and that’s dangerous."

As for Ayrin and Leo, ChatGPT's context window is limited to around 30,000 words. As the NYT points out, this means she had to 'groom' ChatGPT into being NSFW almost every week. When asked what she'd pay to keep Leo's memories, she said she'd splash up to $1,000 a month. Six months in, she doesn't see her relationship with Leo ever ending.